Can OpenAI write programs on its own?

Posted 1 year ago by Martin Dluhos

If you’ve been following the news in the IT world, you’ve surely heard about OpenAI. For the past few months, this company has made headlines with its excellent AI language models. OpenAI is best known for ChatGPT, a user-friendly, chatbot-like tool that’s being used to simplify the content creation process. Besides ChatGPT, its offering also used to include the now-deprecated Codex API, which served not only for code generation but also for manipulating existing code. Some companies already use a variant of the Codex model in their tools. For example, GitHub’s Copilot uses Codex extensively to provide code suggestions during software development.

All of this got us thinking: if ChatGPT can make content creation easier, could Codex streamline the process of writing programs? Being a software company, we decided to evaluate Codex by using it to create a simple CLI tool that queries the Google Calendar API to show the current availability status of the 3 meeting rooms in our Ostrava office. Even though the Codex API has recently been deprecated, it’s still a useful case study demonstrating what OpenAI models are capable of when it comes to generating code. Read on to learn more.

Getting started with code generation

The easiest way to get started is to use the OpenAI playground directly in a browser, which is available after you sign up. Since we are working with code instead of text, we choose Codex’s code-davinci-002 as our completion model. We start off with the following simple prompt:

    # Python 3
    # Using Google Calendar API, get the list of all my Google calendars
    # and print the calendar names and their IDs.

Lo and behold, OpenAI generates an entire Python script on the first try. Does it run? Well, first we need to install the required packages. Which ones do we need? We can look at the imports or simply ask OpenAI:

    # Which python packages do I need to install
    # for these imports to succeed?
    # Code with imports:
    ```
    from apiclient import discovery
    from oauth2client import client
    from oauth2client import tools
    from oauth2client.file import Storage
    ```
    # Answer:
    The packages required for these imports to succeed are:
    - apiclient
    - oauth2client
    - google-auth-oauthlib

With the necessary packages installed, the script runs successfully on the first try, and lists the Google calendars from my account:

    Birthdays addressbook#[email protected]
    Holidays in Czechia en.czech#[email protected]
    [email protected] [email protected]
    Ostrava Office-3-POPs MTG [email protected]
    Ostrava Office-3-Back MTG [email protected]
    Ostrava Office-3-Main MTG [email protected]

All the code in the script was generated from the simple prompt given above. We only had to provide a credentials file to authenticate against the API and name it appropriately. We did not, however, need to change a single line in the generated code.
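The generated script itself is not reproduced here, but its core step can be sketched roughly as follows. This is a minimal sketch, not Codex's actual output: it assumes `service` is a Calendar v3 client created with `googleapiclient.discovery.build("calendar", "v3", credentials=...)`, and the function names `list_calendars` and `print_calendars` are our own.

```python
def list_calendars(service):
    """Return (summary, id) pairs for every calendar on the user's list.

    `service` is assumed to be a Google Calendar v3 client object.
    """
    calendars = []
    page_token = None
    while True:
        # calendarList().list() returns one page of entries at a time.
        response = service.calendarList().list(pageToken=page_token).execute()
        for entry in response.get("items", []):
            calendars.append((entry.get("summary", ""), entry["id"]))
        # A missing nextPageToken means this was the last page.
        page_token = response.get("nextPageToken")
        if not page_token:
            break
    return calendars


def print_calendars(service):
    """Print each calendar name followed by its ID, one per line."""
    for summary, calendar_id in list_calendars(service):
        print(f"{summary} {calendar_id}")
```

Because the function only depends on the `service` object it is given, it can be exercised with a stand-in object instead of a live API connection.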

Implementing logic via code editing

What’s next? We have a working script, but it does not tell us anything about the availability status of our 3 meeting rooms. We need to iterate on our script. To do this, we need to edit the existing code. OpenAI provides the code-davinci-edit-001 model, so we used that. This model takes two inputs: existing code and instructions to modify it. We noticed that the authentication library used in the script is already deprecated, so we tell Codex to replace it:

Use google's auth library instead of oauth2client.

It succeeds again, producing code with the same functionality, only modified to use the google-auth-oauthlib library.
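For readers who have not used google-auth-oauthlib, an authentication step built on it typically looks something like the sketch below. It follows the usual installed-app flow and is not the code Codex produced; the `token.json` cache file, the read-only scope, and the parameter names are our assumptions. It needs the `google-api-python-client` and `google-auth-oauthlib` packages at call time.

```python
import os

# Assumption: read-only access is enough for listing calendars.
SCOPES = ["https://www.googleapis.com/auth/calendar.readonly"]


def authenticate(credentials_path="credentials.json", token_path="token.json"):
    """Return an authorized Calendar v3 client (sketch, file names assumed)."""
    # Imported lazily so this module loads even without the Google packages.
    from google.auth.transport.requests import Request
    from google.oauth2.credentials import Credentials
    from google_auth_oauthlib.flow import InstalledAppFlow
    from googleapiclient.discovery import build

    creds = None
    if os.path.exists(token_path):
        # Reuse the token cached by a previous run.
        creds = Credentials.from_authorized_user_file(token_path, SCOPES)
    if not creds or not creds.valid:
        if creds and creds.expired and creds.refresh_token:
            creds.refresh(Request())
        else:
            # Opens a browser window for the user to grant access.
            flow = InstalledAppFlow.from_client_secrets_file(credentials_path, SCOPES)
            creds = flow.run_local_server(port=0)
        with open(token_path, "w") as token_file:
            token_file.write(creds.to_json())
    return build("calendar", "v3", credentials=creds)
```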

Next, we want to fetch only meeting rooms, which are labeled as resources in Google Calendar:

Modify the code above to get the list of all my Google calendars which are resources.

Codex adds some filter parameters to calendarList(), but they do not narrow the query down to the desired meeting rooms. After a few more trials, we notice that the 3 meeting rooms have “resource” in their IDs:

Modify the code above to get the list of all Google calendars which have the string 'resource' in its id.

This time, it works. Next, we need to figure out whether the meeting rooms are currently busy. When we ask about occupancy, Codex generates code which references a nonexistent busy calendar field. We looked at the documentation and found that we need the “freeBusy” query. We try again:

Modify the code above to show if each of the calendars is currently free or busy using freeBusy query.

Codex generates an API call for the query, including guessing reasonable query parameters. It guesses that we are interested in knowing whether the rooms are free or busy in the next 30 minutes, which is a very reasonable assumption. For each room, it prints either “busy” or “free”. We then modify the script to show the end time of the current event in case the room is “busy”:

Modify the code above to show until what time the calendar is busy.
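The free/busy check described above might look roughly like this. It is a sketch under assumptions rather than the generated code: `service` is a Calendar v3 client, the helper name `busy_until` and its parameters are ours, and we assume the first busy block returned is the one currently in progress.

```python
from datetime import datetime, timedelta, timezone


def busy_until(service, calendar_id, window_minutes=30, now=None):
    """Return when the current busy block ends, or None if the room is free.

    Looks at the next `window_minutes` minutes, mirroring the 30-minute
    window that Codex chose in our experiment.
    """
    now = now or datetime.now(timezone.utc)
    body = {
        "timeMin": now.isoformat(),
        "timeMax": (now + timedelta(minutes=window_minutes)).isoformat(),
        "items": [{"id": calendar_id}],
    }
    response = service.freebusy().query(body=body).execute()
    busy = response["calendars"][calendar_id].get("busy", [])
    if not busy:
        return None
    # Assumption: the first block is the earliest one. A trailing "Z" is
    # rewritten because older Python versions cannot parse it directly.
    end = busy[0]["end"].replace("Z", "+00:00")
    return datetime.fromisoformat(end)
```

Passing `now` explicitly is only there to make the function easy to test with a fixed clock.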

Now that we have the core functionality working, let’s put some final touches on the format and appearance of the printed output. We remove the repeated string from the meeting room name and print the end time without the date, which is always the present day.

We’ll make the output look prettier by color-coding the printed info. We actually needed multiple tries to get the intended output. It finally worked with the following prompt:

Modify the code above to colorize the free and busy information.

Make sure the calendar entry summary is never colored.
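The formatting touches described above (shortening the room name, printing only the time, and coloring the status but not the name) can be sketched as follows. To keep the example dependency-free, it uses raw ANSI escape codes in place of a colorizing library, and the prefix `"Ostrava Office-3-"` is our guess at the repeated string; both are assumptions, as are the function names.

```python
from datetime import datetime

# ANSI escape codes, used here instead of a third-party color library.
GREEN = "\033[32m"
RED = "\033[31m"
RESET = "\033[0m"


def short_name(summary, prefix="Ostrava Office-3-"):
    """Drop the prefix shared by all room names (prefix is an assumption)."""
    return summary[len(prefix):] if summary.startswith(prefix) else summary


def format_status(summary, busy_end=None):
    """Build one output line; only the status part is colored."""
    name = short_name(summary)
    if busy_end is None:
        status = f"{GREEN}free{RESET}"
    else:
        # Show the end time without the date, since it is always today.
        status = f"{RED}busy until {busy_end.strftime('%H:%M')}{RESET}"
    return f"{name}: {status}"
```

For example, `print(format_status("Ostrava Office-3-Main MTG"))` prints the room name in the default terminal color followed by a green "free".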

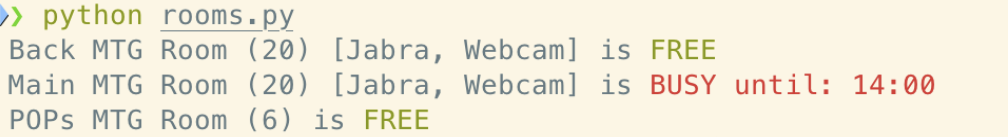

Here’s the nicely color-coded output of our script showing the current availability status of each of profiq’s 3 meeting rooms:

Refactoring and documenting

The script is working as we intended. But what about its code? All the logic is in one main() function. Let’s make it more readable:

Refactor the code in `main()` function in the script above into multiple functions.

When it comes to refactoring, we surprisingly achieved the most sensible result with text-davinci-003, which is a general model for natural language processing. It created functions authenticate(), get_calendar_list(service), and check_availability(service, calendar_list_entry). Now that the code is in good shape, how about adding some documentation? Codex can help with that, too:

Create an elaborate, high quality docstring for this Python script.

The output description is surprisingly concrete, pointing out the credentials.json as a needed prerequisite. To generate docstrings for the individual functions, we were inspired by the nvim-magic plugin for a descriptive prompt:

    # Write an elaborate, high quality docstring

    This is an example of writing an elaborate, high quality docstring that follows a best practice for the given language. Attention is paid to detailing things like

    * parameter and return types (if applicable)
    * any errors that might be raised or returned, depending on the language

    I received the following code:

    ```python
    {{ code }}
    ```

    The code with a really good docstring added is below:

    ```python

OpenAI generated a valid docstring for each function with an accurate description. However, the descriptions for two of the functions were quite general and not detailed enough. For a really informative docstring, we would need to add that get_calendar_list() returns only “resource” calendars, which are calendars for our meeting rooms. Furthermore, the docstring for check_availability() does not mention that it not only checks the availability of a calendar (meeting room), but also prints information about it to the console. While the docstring is not as informative as we’d like, it’s really easy for a developer to fill in the details manually with the bulk of the docstring content already in place.
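To illustrate the kind of detail we would add by hand, here is a sketch of `get_calendar_list()` with a docstring that spells out the "resource" filtering. The function body is our own simplified reconstruction (it reads only the first page of results), not the generated code, and the docstring follows the Google style by our choice.

```python
def get_calendar_list(service):
    """Return the calendars that represent meeting rooms.

    Only "resource" calendars are returned, that is, entries whose ID
    contains the substring ``resource``. Personal, birthday, and holiday
    calendars are skipped.

    Args:
        service: An authorized Google Calendar v3 client, as returned by
            ``authenticate()``.

    Returns:
        list[dict]: The matching calendarList entries, each with at least
        an ``id`` key.

    Raises:
        googleapiclient.errors.HttpError: If the API request fails.
    """
    # Simplification: pagination is ignored in this sketch.
    response = service.calendarList().list().execute()
    return [entry for entry in response.get("items", []) if "resource" in entry["id"]]
```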

We should note that during our code experiments in the OpenAI playground, we did not alter any of the model’s default parameters except “maximum length”, which we raised so that the output would not get cut off.

Writing unit tests

Our code seems to work, but let’s add some unit tests to check its robustness. Unfortunately, Codex models didn’t create any useful tests when we asked. In one response, for example, Codex just went on printing imports from unittest.mock in a loop until it reached the maximum token length. On another try with the code edit model, it did create test classes, but without any useful assertions. When Codex failed us, we decided to look to ChatGPT for help. We simply asked:

Write a unit test for this Python 3 function

and inserted the check_availability() function after the prompt. This time, we were very impressed with the answer. We continued by asking it to test the same function, but with different data and thus, different expected output:

Please generate another test which tests when a calendar is busy.

Next, we tried testing get_calendar_list(). We needed to make two minor modifications in test_get_calendar_list(), which took us a minute. Finally, we also generated a test for authenticate(), which ChatGPT figured out on the third try. Eventually, all four tests ran and passed. While these tests do not cover all possible scenarios, we have at least one test for each function, so no function remains untested.
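The tests ChatGPT wrote are not reproduced here, but the general approach of mocking the Calendar client looks roughly like this. The `check_availability()` below is a simplified, hypothetical stand-in for our real function (it omits the time window and the end time), included only so that the example is self-contained.

```python
import io
import unittest
from contextlib import redirect_stdout
from unittest.mock import MagicMock


def check_availability(service, calendar_list_entry):
    """Hypothetical stand-in: print whether a calendar is free or busy."""
    calendar_id = calendar_list_entry["id"]
    body = {"items": [{"id": calendar_id}]}
    response = service.freebusy().query(body=body).execute()
    busy = response["calendars"][calendar_id]["busy"]
    status = "busy" if busy else "free"
    print(f"{calendar_list_entry['summary']}: {status}")


def make_service(busy_blocks, calendar_id="room-1"):
    """Build a mock client whose freebusy query returns `busy_blocks`."""
    service = MagicMock()
    service.freebusy.return_value.query.return_value.execute.return_value = {
        "calendars": {calendar_id: {"busy": busy_blocks}}
    }
    return service


class CheckAvailabilityTest(unittest.TestCase):
    ENTRY = {"id": "room-1", "summary": "Main MTG"}

    def run_check(self, busy_blocks):
        # Capture what the function prints so we can assert on it.
        out = io.StringIO()
        with redirect_stdout(out):
            check_availability(make_service(busy_blocks), self.ENTRY)
        return out.getvalue()

    def test_free(self):
        self.assertEqual(self.run_check([]), "Main MTG: free\n")

    def test_busy(self):
        block = {"start": "2023-03-01T09:30:00Z", "end": "2023-03-01T10:30:00Z"}
        self.assertEqual(self.run_check([block]), "Main MTG: busy\n")
```

Saved as a module, these tests can be run with `python -m unittest`. No network access or credentials are needed, because the mock replaces the API client entirely.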

As the icing on the cake, we asked ChatGPT to create a README file for the script:

Create a README file description for the script above please.

You can judge for yourself how it handled the job. Notice that it seems to recognize its own code.

Evaluation

We started from scratch and now have a working Python script that prints information about the availability of profiq’s meeting rooms. How useful was OpenAI’s Codex during the script’s development? One could say, “Well, this is not a sophisticated program with complicated logic. What’s the added value of using Codex instead of writing this myself?” In our opinion, there are a number of benefits.

First of all, we did not need to study the Google Calendar API documentation during the script’s development, except when we looked up the freeBusy query. That’s a really big time-saver, as we relied instead on Codex’s knowledge of the API functions, their parameters, and their return values. Furthermore, we did not need to search for Python libraries to authenticate against the API or to colorize the output, nor spend time studying how to use them. Codex generated valid code that uses those libraries without us explicitly naming them. All we needed to do was add those libraries as dependencies in our project.

We also did not need to remember the Python function to remove a substring or to add a time interval to a datetime. While these are well known to a seasoned Python programmer, they might be new to a novice. In summary, experienced programmers save time when calling unfamiliar APIs or using new libraries, while less experienced developers also get an opportunity to learn functions that are part of Python’s standard library.

That being said, Codex did not write the script itself. We still needed to understand the specification of the program to be implemented and learn to write a good prompt. We also needed to judge whether the whole script generated or edited by Codex works according to our expectations.

Furthermore, there were cases when Codex did not generate code that fully satisfied our expectations, or generated code which needed to be manually edited and improved. In such cases, knowledge of Python, or at least familiarity with it, was necessary. We should also note that the OpenAI models we used were trained on data available prior to September 2021, so they do not know about new libraries or library updates published later.

Conclusion

The version of Codex we used only accepted input up to 8000 tokens. So, what worked really nicely for our small script, which we could send as input in its entirety, cannot necessarily be applied to large and complex codebases.
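A quick way to gauge whether a file would fit into such a limit is a back-of-the-envelope estimate like the one below. It relies on the rough rule of thumb of about four characters per token, which is only an approximation (source code often tokenizes differently), and the helper names are hypothetical. A real tokenizer would give exact counts.

```python
MAX_INPUT_TOKENS = 8000   # limit of the Codex version we used
CHARS_PER_TOKEN = 4       # rough heuristic, not an exact tokenizer


def estimate_tokens(text):
    """Estimate the token count of `text`, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text, reserved_for_output=0):
    """Check whether `text` plus reserved output space fits the limit."""
    return estimate_tokens(text) + reserved_for_output <= MAX_INPUT_TOKENS
```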

Codex was not conversational and did not hold context the way ChatGPT does. Codex was therefore useful for generating and editing code, while ChatGPT’s strength during development is in explaining code and answering questions about it. We found ChatGPT particularly useful for learning how to use a new library or exploring a new API.

Despite its limitations, we were very impressed with Codex’s capabilities, which surpassed those of any code-assistance tool we had used thus far. Since ChatGPT can now be queried via an API, it can be used to generate and manipulate code in a way that’s similar to Codex. Newer versions of ChatGPT released since this case study was conducted have improved on the earlier, publicly available OpenAI models. And that’s not all. OpenAI models keep improving at an increasing rate and similar models are being rapidly developed by other big tech companies. We’re just getting started.
