Deploying and Scaling Elixir Apps: Heroku vs Fly.io

Posted 9 months ago by Martin Dluhos

Phoenix is a popular Elixir web framework for building scalable, high-performance web applications. If you are building a large-scale Phoenix-based application platform, then you would most likely have a DevOps person or a DevOps team to deploy it in GCP or AWS with a Kubernetes orchestration engine. If you’re developing your app on your own or within a small team of developers, however, such cloud platforms and tools are unnecessarily sophisticated and complex for your needs. In this article, we will explore and compare two platforms that make the daunting task of deploying a Phoenix application simpler: Heroku and Fly.io.

Both platforms provide a streamlined interface for deploying and managing Phoenix applications, so developers can build their application without a dedicated Ops team. First, we will walk through the steps to deploy an example Phoenix application, and point out challenges along the way. Then, we’ll compare the benefits and limitations of each, focusing primarily on their scaling capabilities. For our example application, we’ll use Phoenix LiveView Demo.

Heroku: The go-to solution

Since Heroku has historically been the obvious choice for app developers to deploy their apps, we’re starting our test drive with it. The recommended way to deploy an app in Heroku is via buildpacks. A buildpack is a set of scripts specific to a language and framework that assemble the application into a slug, which is a bundled application ready for execution. Buildpacks have been developed for many languages and eliminate the need to write and maintain a Dockerfile, which simplifies the deployment process.

Unfortunately, Elixir is not officially supported by Heroku. Despite this, we can still deploy our app using a community buildpack. Besides the initial signup, all deployment steps can be performed with the Heroku CLI. First, we created the application using the buildpack. Next, we added a database to the app with the Postgres add-on. Finally, we deployed by pushing our project to the Heroku Git remote that we had set up earlier. If all goes well, the app should be live. While following the Phoenix documentation for deploying on Heroku, however, we ran into a few issues. The first was a shared-library error during the build:

/app/.platform_tools/erlang/erts-10.2.3/bin/beam.smp: error while loading shared libraries: libtinfo.so.5: cannot open shared object file: No such file or directory

It turns out that the default Heroku stack used by the Elixir buildpack causes this error regardless of which versions of Elixir and Erlang are used. We had to downgrade to the Ubuntu 20.04 stack to get past this error. Another issue occurred after deploying the app:

Error R10 (Boot timeout) -> Web process failed to bind to $PORT within 60 seconds of launch

After some searching, we found another GitHub issue that recommended adding this line to the Phoenix endpoint configuration:

http: [port: {:system, "PORT"}]

We really needed to dig deep into GitHub issues to figure out how to resolve the errors above. This shows us that Elixir is indeed not a first-class citizen on Heroku.
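For reference, the `{:system, "PORT"}` tuple is the older way of passing the port to the endpoint. Projects generated by recent Phoenix versions usually read the variable in `config/runtime.exs` instead. Below is a minimal sketch of that form, assuming a hypothetical app named `:demo` with an endpoint module `DemoWeb.Endpoint`; substitute the names from your own project.

```elixir
# config/runtime.exs (sketch; :demo and DemoWeb.Endpoint are placeholder names)
import Config

if config_env() == :prod do
  # Heroku passes the port to the web process through the PORT variable.
  # The "4000" fallback is only an assumption for running outside Heroku.
  port = String.to_integer(System.get_env("PORT") || "4000")

  config :demo, DemoWeb.Endpoint,
    # Listen on all interfaces so the platform router can reach the app.
    http: [ip: {0, 0, 0, 0}, port: port]
end
```

Either way, the goal is the same: the web process has to bind to the port Heroku hands it before the boot timeout expires, otherwise the R10 error shown above appears.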

Scaling on Heroku

By default, we have only one instance of our app up and running. What happens if our app goes viral and we start receiving lots of requests? Heroku VMs, called dynos, can be scaled horizontally, by adding more instances, as well as vertically, by adding more resources to each instance. However, to scale to more than one instance, we need to upgrade to at least the Standard payment plan, for which the price per dyno is almost four times higher! But that’s not all. What if you want your app to scale automatically instead of adding instances manually? Heroku does support autoscaling, which can be configured through the Dashboard UI. When we tried to turn it on, however, the following message was displayed:

Item could not be updated: Scaling app liveview-demo failed. Access to performance-m and performance-l dynos is limited to customers with an established payment history. For more information, see https://devcenter.heroku.com/articles/dyno-types#default-scaling-limits

So, Heroku does support autoscaling, but only under certain conditions. Not only do you need to upgrade to an even higher payment plan, but you apparently also need to have an existing payment history; so, no fun for our app in our newly created account.

How about deployment locations? The deployment region is chosen at app creation. By default, Heroku only offers two options: eu and us. For more advanced (and more expensive) private runtime plans, there are six city locations around the world. Although you can migrate an app to a different region, Heroku does not currently offer the option to scale a deployed app to more than one region.

Fly.io: The new kid in town

Fly.io is another platform-as-a-service that makes deploying apps easier. From our first glance at Fly.io’s documentation, it is clear that Elixir Phoenix is at the forefront of supported frameworks. Like Heroku, Fly.io knows how to use buildpacks to build and package the project’s source code, so Elixir developers don’t need to create any special deployment configuration files. There is, however, always an option to add a custom Dockerfile if you want to have more flexibility and control.

Similar to Heroku, Fly.io provides a CLI tool, flyctl, to interact with its platform. Once you’ve logged in and are equipped with flyctl, only a single command is needed to deploy the app: fly launch. During the guided deployment, we chose our app name and deployment region, and set up a database. When the process completed, we were ready to access our running instance. During the build process, Fly.io also autogenerates two new files: a Dockerfile used for deployment and fly.toml, which holds the application’s default configuration for Fly.io.

There were a couple of configuration changes we needed to make in the Phoenix project for the deployment to succeed. Those changes had to do with configuring the Ecto.Repo to use IPv6 and pointing the endpoint to the app’s URL. These were not too challenging to figure out, as there is an example Phoenix project available on GitHub with a working configuration for deployment on Fly.io.
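The two changes described above could look roughly like the following. This is a sketch under assumptions, not the exact configuration we used: `:demo`, `Demo.Repo`, and `DemoWeb.Endpoint` are placeholder names, and `PHX_HOST` is an assumed environment variable holding the app’s public hostname.

```elixir
# config/runtime.exs (sketch; :demo, Demo.Repo and DemoWeb.Endpoint are placeholder names)
import Config

if config_env() == :prod do
  database_url =
    System.get_env("DATABASE_URL") ||
      raise "environment variable DATABASE_URL is missing"

  config :demo, Demo.Repo,
    url: database_url,
    # Make the database driver connect over IPv6.
    socket_options: [:inet6]

  # PHX_HOST is an assumed variable name; "example.com" is only a fallback.
  host = System.get_env("PHX_HOST") || "example.com"

  config :demo, DemoWeb.Endpoint,
    # Generate URLs that point at the app's public address.
    url: [host: host, port: 443, scheme: "https"]
end
```

Reading both values from the environment keeps them out of the repository, so the same release can run under a different hostname or against a different database.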

Thus far, Fly.io seems to be very similar to Heroku. So, what sets it apart? Fly.io’s CEO Kurt Mackey claims that the platform empowers apps to run close to the users’ location via multi-regional deployments. How does it work in practice? How difficult is it to set up a multi-regional deployment and how is it configured?

Scaling on Fly.io

When executing fly launch, we were prompted to select a deployment region from 26 locations around the world. Once the first instance is up and running, it’s easy to add additional regions via fly regions add <region-name>. It is also possible to configure the number of running app instances using fly scale count <number-of-instances>. Fly.io then distributes those instances across the defined regions by itself. That’s really neat!

What’s more, Fly also supports autoscaling. You specify the minimum and maximum number of application instances, and the platform takes care of the rest based on the scaling criteria. It’s very straightforward to configure using the CLI; you don’t need to know how to configure Kubernetes, and it takes a few seconds to be applied. In contrast to Heroku, we did not encounter any limitations imposed by our payment plan or payment history.

Let’s see how well autoscaling across regions works in practice. Using this simple command, we defined the maximum and minimum number of app instances:

fly autoscale set min=1 max=4

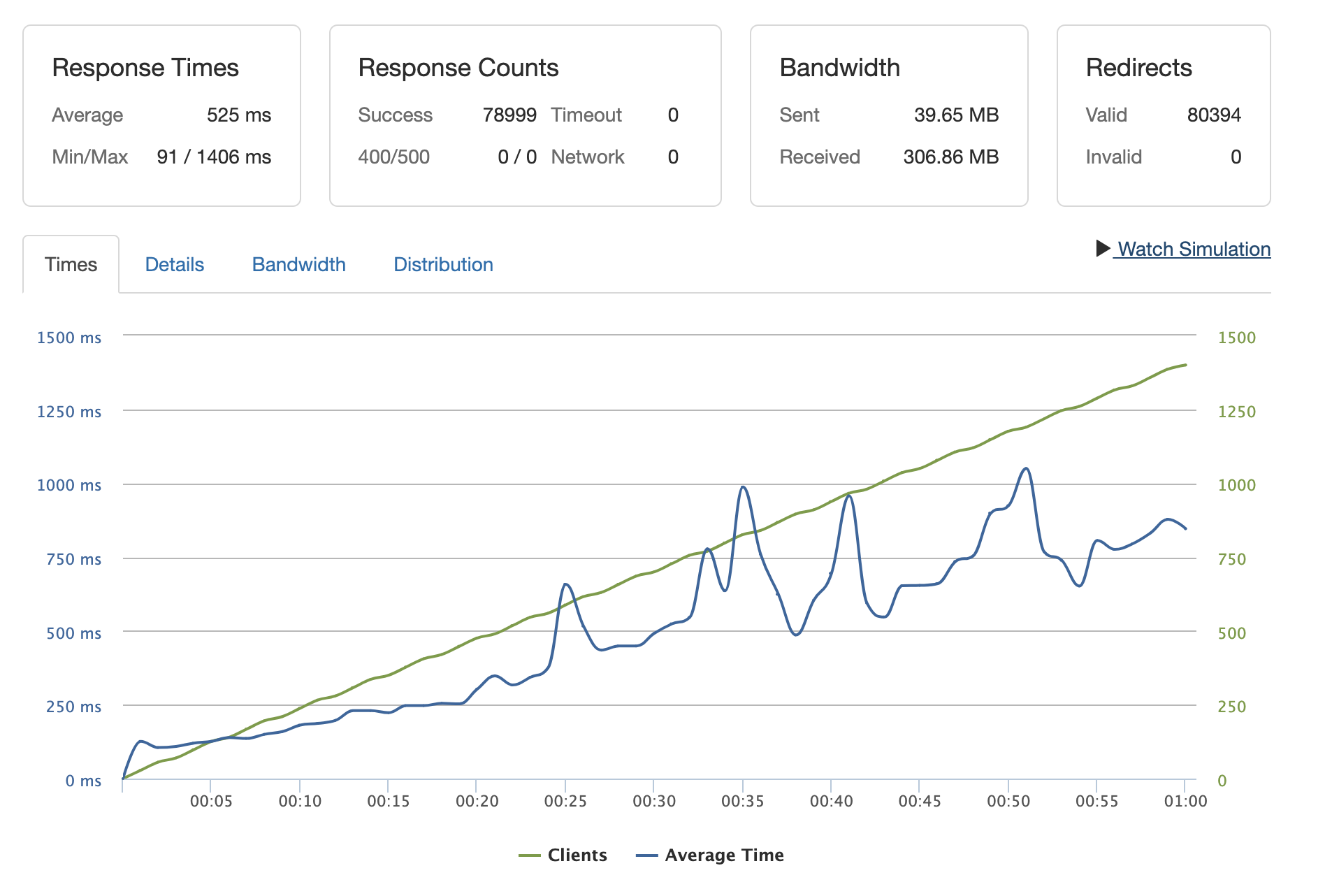

To test autoscaling on our app, we used an online load-testing service, loader.io, to create hundreds of simultaneous connections. We executed a test which gradually incremented the number of client connections from 0 to 1400:

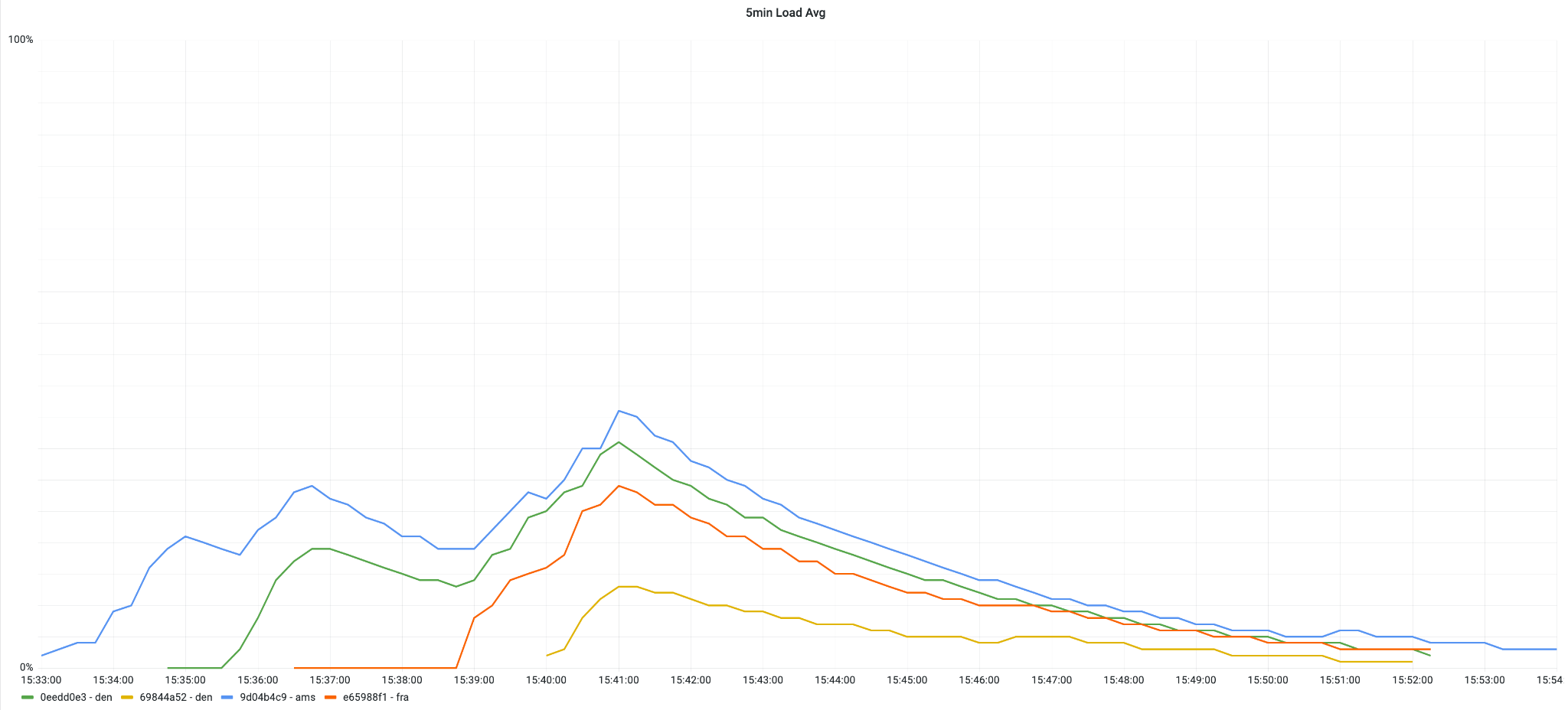

One of the nice features that Fly.io provides out-of-the-box is a Grafana Dashboard, which shows useful graphs with statistics about app instances and the underlying virtual machines. Here is a graph which shows the autoscaling in practice:

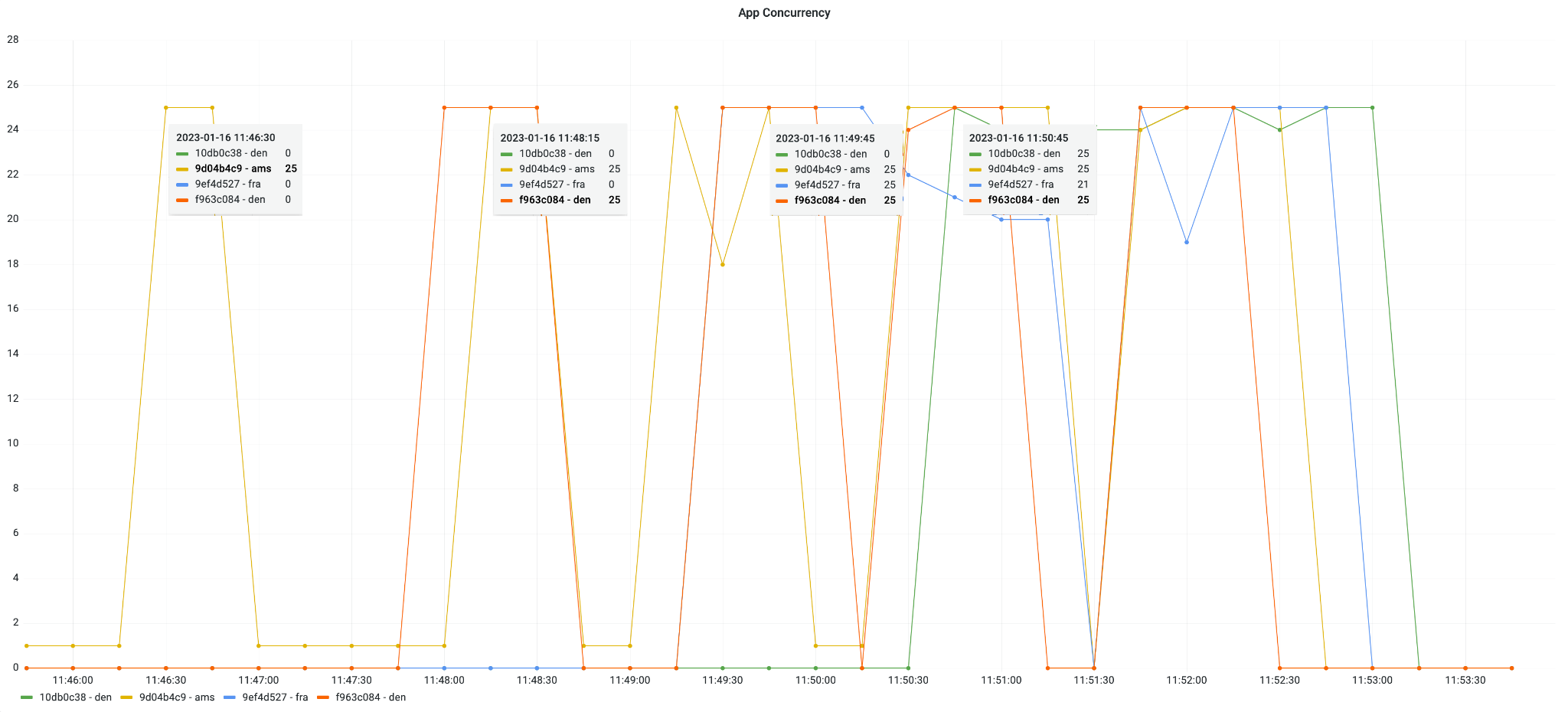

Fly.io decides how to scale the application based on the load, which, by default, is determined by the number of concurrent connections. The default limit for the number of concurrent connections to one instance is 25. Note that this is just an example with a default value; we would increase the limit significantly in production.

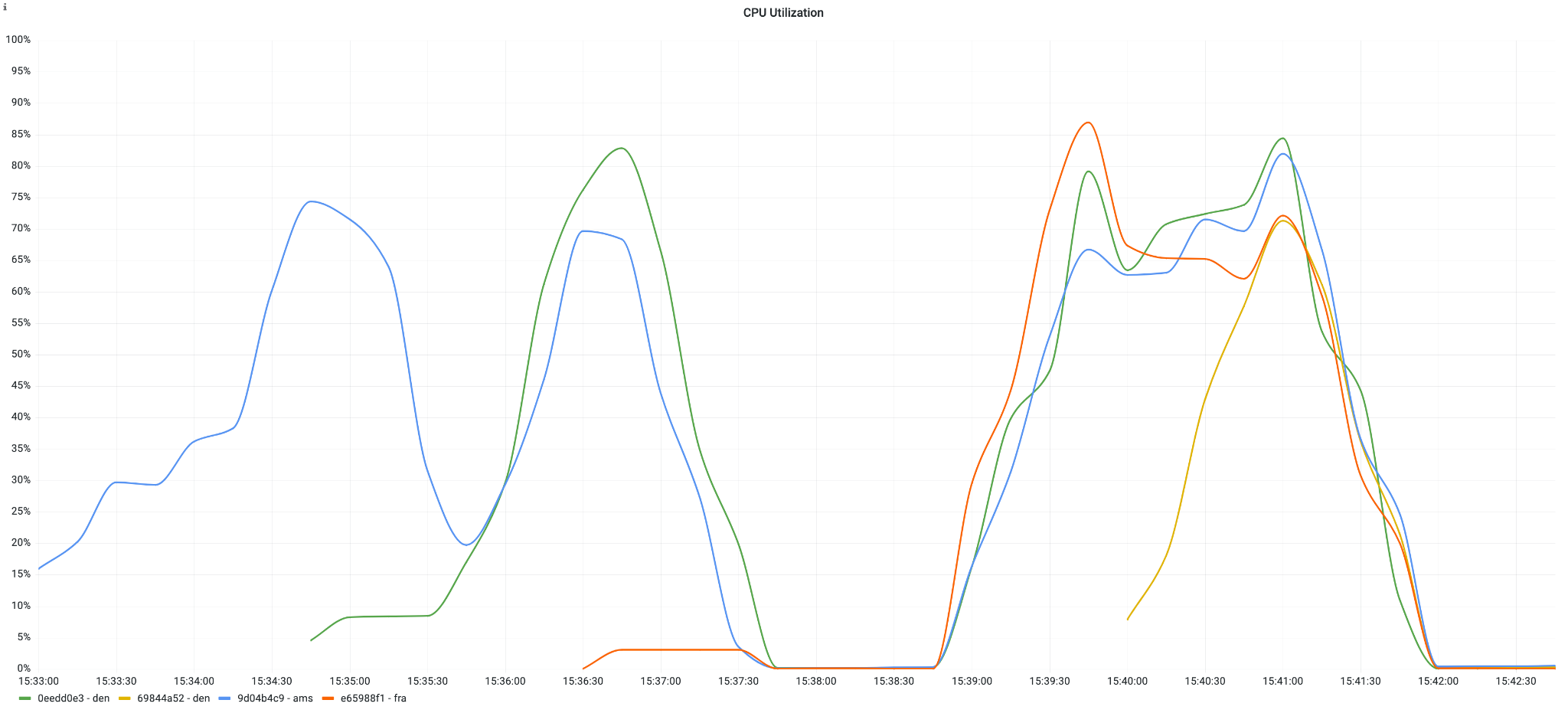

When the connection limit is reached, a new app instance is spawned to handle connections above the limit. Since we defined three regions to deploy the app, Fly.io deployed each new instance in a new region. When all regions had a running instance, a second instance was deployed in one of the three defined regions. This incremental scaling process is clearly visible in a graph from another load test:

Conversely, when the number of connections decreased, the app was scaled down. Scaling down is a more gradual process that’s triggered when the load decreases below the defined limit during a 10-minute interval, which can clearly be seen in the following graph:

After the load tests terminate, the application is scaled down to just one instance, which is the specified minimum.

Fly Postgres

During the guided deployment, we were offered an option to provision a Postgres database as well. When we accepted, Fly.io autogenerated DB credentials and created a VM instance with a running Postgres instance. It then linked the DB instance with the app instance and ran Ecto migrations.

During the load tests discussed above, we noticed the following errors in the logs as the number of client requests to the application increased:

2023-01-16T12:49:27Z app[99634228] den [info]Request: GET /users

2023-01-16T12:49:27Z app[99634228] den [info]** (exit) an exception was raised:

2023-01-16T12:49:27Z app[99634228] den [info] ** (DBConnection.ConnectionError) connection not available and request was dropped from queue after 116ms. This means requests are coming in and your connection pool cannot serve them fast enough.

Fly.io scales the database by provisioning additional database replicas. Adding replicas didn’t remediate the issue, though. What helped instead was tuning the database parameters. We increased the maximum number of concurrent connections:

fly postgres config update --max-connections 1000 -a liveview-demo-db

We also set the pool size to 50 in the app’s Ecto.Repo config. A larger pool consumes more resources, so we also increased the database VM’s memory from 256 to 512 MB:

fly machine update <db-machine-id> --memory 512 -a liveview-demo-db

where <db-machine-id> is the ID of the VM instance running the database. With these adjustments, the database errors disappeared from the logs.
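The pool size change mentioned above could be expressed as follows. This is a sketch: `:demo` and `Demo.Repo` are placeholder names, and `POOL_SIZE` is an assumed environment variable that lets the value be adjusted without a code change.

```elixir
# config/runtime.exs (sketch; :demo and Demo.Repo are placeholder names)
import Config

if config_env() == :prod do
  config :demo, Demo.Repo,
    # Each app instance opens up to this many database connections.
    # POOL_SIZE is an assumed variable name; 50 is the value used in our test.
    pool_size: String.to_integer(System.get_env("POOL_SIZE") || "50")
end
```

The pool size applies per app instance, so it has to be read together with the autoscaling limits. Assuming one repo pool per instance, the maximum of 4 instances set earlier would open at most 4 × 50 = 200 connections, which stays well below the Postgres limit of 1000 configured above.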

Fly.io’s docs are clear that the Postgres service is a standalone database app running on a VM rather than a managed database solution. This means that tasks such as storage and memory scaling, version upgrades, or backup management have to be done manually. Those who don’t want to deal with all these tasks can always opt for a managed database solution offered by other cloud providers.

Conclusion

To summarize, we explored how to deploy a Phoenix application on Heroku and Fly.io, both of which provide platform-as-a-service for application deployment. Both platforms provide an easy-to-use CLI tool for managing the deployment process, as well as built-in monitoring tools. Besides Fly.io and Heroku, there is a third platform called Gigalixir, specifically for deploying Elixir applications. As Gigalixir does not support autoscaling, however, we have not included it in this comparison.

When testing an example Phoenix application on Heroku, we encountered some issues during the deployment itself, and subsequently ran into limitations with its scaling capabilities. In contrast, deploying our app on Fly.io was a smooth experience, and we found that it offers several innovative features that other platforms don’t offer. One of the differentiating features of Fly.io is autoscaling, which it provides out-of-the-box without any limiting conditions. What really gives Fly.io an edge over other application platforms, however, is its built-in ability to scale applications to run across regions, which reduces latency, and thus provides a better user experience. This becomes especially useful for applications with a global user base.

Last, but not least, Fly.io offers free resource allowances for running small applications and doing experiments, while Heroku no longer offers a free option. Looking at pricing, Heroku seems to be more expensive than Fly.io by an order of magnitude for comparable services. Overall, we found Fly.io to provide a smoother and richer experience for deploying our Elixir Phoenix application, all within a small budget.