Life’s Imprints in 360 Degrees

Posted 7 years ago by Lukáš Trnka

In an earlier blog entry, we wrote about how tech research at profiq led us to dive into virtual reality. We began with a thorough survey of VR, at a time when our main interest was the overall state of the technology. What equipment is available on the market, and what can users do with it? What is the architecture of the technical solution, and which platforms is it built on? What are the real-world use cases, and how can we, as software engineers, contribute through our expertise and our own development? These were the kinds of questions we asked during this fun and inspiring phase of getting to know virtual reality and testing its ever-expanding limits. So which area did we ultimately choose to examine more closely?

It is truly fascinating how many possibilities this new technology opens up for engineering research, and how many aspects of human life it may affect in the near future. One of the basic premises of virtual reality is that it can transport you to an environment other than the one you are physically in. The places you can “visit” from the comfort of your home or office come in two kinds. What are they?

First, these hyper-real worlds can be modelled artificially. The user enters a newly created space of 3D objects and polygons that is highly believable and offers rich interactivity, closely approaching the way the real world behaves around us. Valve Corporation, one of the leading game developers, created an engaging application called The Lab, which is a good example of this approach. As VR users, we’re very curious about the new opportunities that artificially modelled worlds will bring to VR, and current developments suggest we have a lot to look forward to!

The second way of “teleporting” elsewhere through your VR headset is to capture images and experiences from the real world. These recordings are then transmitted to your headset and displayed in a way that most faithfully evokes the impression that you are in the place where they were recorded. Google Street View is a good example of how this use of virtual reality allows very believable virtual travel to places that have been recorded into the application. This domain of “life’s imprints in 360 degrees” has given a whole new dimension to 360° photographs and especially 360° videos, which let users look around a recorded scene in every direction.
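To make the display step a little more concrete: many 360° videos are stored in the equirectangular projection (an assumption, since this post does not name a format), in which the full sphere around the camera is unwrapped into a 2:1 rectangle, with the horizontal viewing angle mapped linearly to x and the vertical angle to y. The minimal Python sketch below uses a hypothetical function name to show how a player could find the pixel of such a frame that lies in the direction the viewer is looking. A real player performs this mapping for every pixel of the headset display, typically on the GPU, so treat this only as an illustration of the geometry.

```python
import math  # not strictly needed; kept for readers extending this to 3D vectors


def direction_to_equirect_pixel(yaw_deg, pitch_deg, width, height):
    """Hypothetical helper: map a viewing direction to a pixel in an
    equirectangular frame (assumed 2:1, longitude -> x, latitude -> y).

    yaw_deg:   horizontal angle in degrees, 0 = frame centre, positive = right
    pitch_deg: vertical angle in degrees, 0 = horizon, positive = up
    """
    # Wrap yaw into [-180, 180) so any full turn of the head maps correctly.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    # Clamp pitch: looking past straight up or straight down is not possible.
    pitch = max(-90.0, min(90.0, pitch_deg))

    # Longitude maps linearly to x (left edge = -180 degrees).
    u = (yaw + 180.0) / 360.0
    # Latitude maps linearly to y (top edge = +90 degrees, i.e. straight up).
    v = (90.0 - pitch) / 180.0

    # Convert normalised coordinates to integer pixel indices inside the frame.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


if __name__ == "__main__":
    # Looking straight ahead in a 3840x1920 frame hits the centre pixel.
    print(direction_to_equirect_pixel(0, 0, 3840, 1920))  # (1920, 960)
```

For a 3840×1920 frame, turning the head 90° to the left lands a quarter of the way across the frame, and looking straight up always lands on the top row, which is why equirectangular frames look stretched near their top and bottom edges.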

The transmission of video content to VR headsets and its display there became the subject of closer, more detailed research at profiq. We’ll cover this topic in this blog entry and in several upcoming ones.

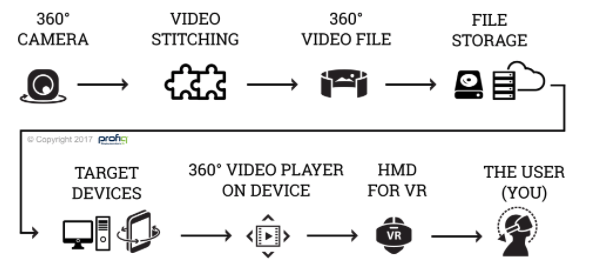

How does the transmission of 360° videos actually work? We’ll start with a simple overview of the key stages involved in getting a 360° video from the camera to your VR headset. The infographic shows the major milestones along this journey. Although the diagram is simplified, it makes clear that the process spans a wide range of topics that software developers can explore. So which of these did we choose, and why? Which topics caught our developers’ attention? Follow our blog and you’ll soon find out!